Airflow Remote Logging Using S3 Object Storage

Apache Airflow is an open-source workflow management tool that provides users with a system to create, schedule, and monitor workflows. It is composed of libraries for creating complex data pipelines (expressed as directed acyclic graphs, also referred to as DAGs), tools for running and monitoring jobs, a web application that provides a user interface and REST API, and a rich set of command-line utilities.

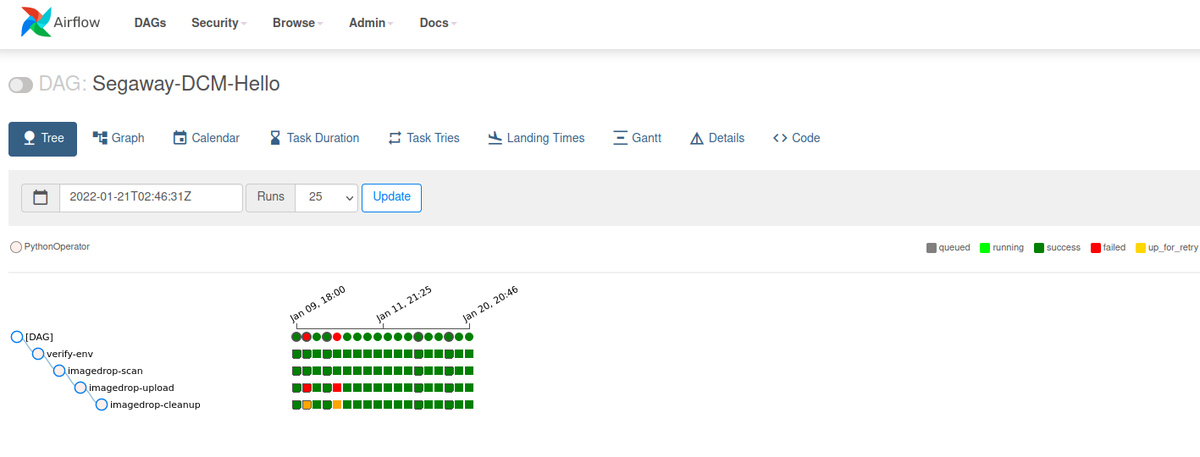

Since data pipelines are commonly run without manual supervision, it is important to ensure that they are executing correctly. Airflow provides two components that work in concert to launch and monitor jobs: the worker processes that execute DAGs, and a scheduler that monitors a task's history and determines when it needs to be run. These systems are often deployed together on a single server or virtual machine. Details about a job run are stored in text files on the worker node, and can be retrieved either by direct inspection of the file system or via the Airflow web application. Access to these logs is essential for diagnosing issues that occur while pipelines are running.

If Airflow is deployed in a distributed manner on a cloud or container platform such as Kubernetes or Docker, however, the default file-based logging mechanisms can result in data loss. This happens for two important reasons:

- container instances are "ephemeral": when they restart or are rescheduled, they lose any files or runtime data that were not part of the original "container image"

- because instances are considered expendable, container orchestration systems like Kubernetes resolve issues with an instance by removing or restarting it

To support as many different platforms as possible, Airflow has a variety of "remote logging" plugins that can be used to help mitigate these challenges. In this article, we'll look at how to configure remote task logging to an object storage system that supports the Simple Storage Service (S3) protocol. The same instructions can be used for the Amazon Web Services (AWS) S3 solution or for a self-hosted tool such as MinIO.

Configuring Remote Logging in Airflow

To configure remote logging within Airflow:

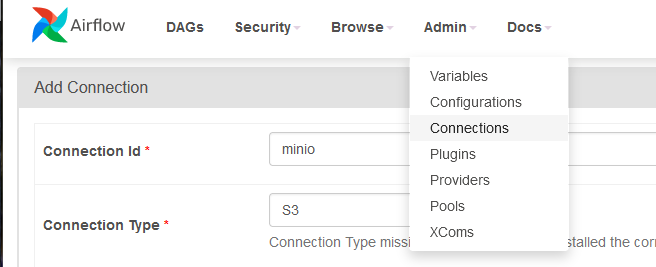

- An Airflow Connection needs to be created to the object storage system where the data will be stored. Connections in Airflow help to store configuration information such as hostname/port and authentication information such as username and password in a secure manner.

- Task instances need to be configured so that they will read and write their data to the S3 instance. It is possible to provide the needed configuration parameters using either the Airflow configuration file (airflow.cfg) or via environment variables.

Step 1: Create S3 Connection

Connections are created from the "Connections" panel under the "Admin" menu of the Airflow web application.

Create a new connection instance by clicking on the "Add a new record" button, which will load the connection form. Provide a "Connection ID", specify the connection type as "S3", and provide a description. Leave all other values blank; the access ID and secret will be provided using the "Extra" field. Important: Make note of the connection ID. This value will be used when configuring the worker instances.

The configuration values in the screenshot below are taken from the Oak-Tree Big Data Development Environment and can be used for Sonador application development. For additional detail, refer to "Getting Started With Sonador".

The final piece of the configuration is provided in the "Extra" box as a JSON object, and must include:

- aws_access_key_id: the AWS access ID that will be used for making requests to the S3 bucket

- aws_secret_access_key: the secret key associated with the access ID

- (optional) endpoint_url: the connection string associated with the self-hosted S3 instance, omit if using AWS S3

The example in the listing below shows how the object should be structured:

    {
      "aws_access_key_id": "jupyter",
      "aws_secret_access_key": "jupyter@analytics",
      "endpoint_url": "http://object-storage:9000"
    }

Important: The parameters in the listing above are from the Oak-Tree development environment. Modify the values of aws_access_key_id, aws_secret_access_key, and endpoint_url so that they match your object storage deployment.
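As a minimal sketch of how the "Extra" value could be generated rather than typed by hand, the snippet below builds the JSON object from its three parameters. The helper name `build_s3_extra` is hypothetical and is not part of Airflow; the key names are the ones listed above, and the sample values are the development credentials from this article.

```python
import json
from typing import Optional


def build_s3_extra(access_key_id: str, secret_access_key: str,
                   endpoint_url: Optional[str] = None) -> str:
    """Return JSON text for the connection's "Extra" field (hypothetical helper)."""
    if not access_key_id or not secret_access_key:
        raise ValueError("both the access key ID and the secret key are required")

    extra = {
        "aws_access_key_id": access_key_id,
        "aws_secret_access_key": secret_access_key,
    }
    # endpoint_url is only needed for self-hosted storage such as MinIO;
    # it is left out entirely when targeting AWS S3.
    if endpoint_url:
        extra["endpoint_url"] = endpoint_url

    return json.dumps(extra, indent=2)


if __name__ == "__main__":
    # Sample values from the development environment described in this article.
    print(build_s3_extra("jupyter", "jupyter@analytics", "http://object-storage:9000"))
```

The printed text can be pasted into the "Extra" box. Generating it with `json.dumps` avoids quoting mistakes, which tend to surface only later as failed log uploads.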

Step 2: Configure Workers

Several parameters need to be provided to worker instances so that they can read and write logs to S3. As noted above, configuration parameters can be provided in the Airflow configuration file airflow.cfg or passed as environment variables. If the connection from the previous section is not set up, or has the wrong parameters, remote logging will fail.

The following values are required:

- AIRFLOW__LOGGING__REMOTE_LOGGING (config parameter remote_logging): boolean which turns on remote logging

- AIRFLOW__LOGGING__REMOTE_BASE_LOG_FOLDER (remote_base_log_folder): the base path for logs

- AIRFLOW__LOGGING__REMOTE_LOG_CONN_ID (remote_log_conn_id): ID of the Airflow connection which should be used for retrieving credentials

- AIRFLOW__LOGGING__ENCRYPT_S3_LOGS (encrypt_s3_logs): controls whether the logs should be encrypted by Airflow before being uploaded to S3

The listing below shows YAML key/value pairs that might be included in the environment section of a Kubernetes manifest or a config map:

    AIRFLOW__LOGGING__REMOTE_LOGGING: "True"
    AIRFLOW__LOGGING__REMOTE_BASE_LOG_FOLDER: "s3://airflow-logs"
    AIRFLOW__LOGGING__REMOTE_LOG_CONN_ID: minio
    AIRFLOW__LOGGING__ENCRYPT_S3_LOGS: "False"
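The environment variable names follow the pattern AIRFLOW__{SECTION}__{KEY}, with double underscores separating the parts. The sketch below illustrates that mapping for the four logging parameters; the function names `airflow_env_var` and `as_environment` are hypothetical helpers written for this article, and the values are the example values used above.

```python
from typing import Dict


def airflow_env_var(section: str, key: str) -> str:
    """Map an airflow.cfg section/key pair to its environment variable name."""
    return f"AIRFLOW__{section.upper()}__{key.upper()}"


# Example values from this article; adjust them to match your deployment.
REMOTE_LOGGING_SETTINGS = {
    "remote_logging": "True",
    "remote_base_log_folder": "s3://airflow-logs",
    "remote_log_conn_id": "minio",
    "encrypt_s3_logs": "False",
}


def as_environment(settings: Dict[str, str], section: str = "logging") -> Dict[str, str]:
    """Return the settings keyed by their environment variable names."""
    return {airflow_env_var(section, key): value for key, value in settings.items()}


if __name__ == "__main__":
    for name, value in as_environment(REMOTE_LOGGING_SETTINGS).items():
        print(f"{name}={value}")
```

Keeping the settings in one dictionary makes it easier to render the same values into a manifest, a config map, or a local shell environment without the names drifting apart.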

If providing the values in airflow.cfg, the parameters should be provided in the logging section:

    [logging]
    # Users must supply a remote location URL (starting with either 's3://...') and an Airflow connection
    # id that provides access to the storage location.
    remote_logging = True
    remote_base_log_folder = s3://airflow-logs
    remote_log_conn_id = minio

    # Use server-side encryption for logs stored in S3
    encrypt_s3_logs = False
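Because a typo in the logging section is easy to miss until a task's logs fail to appear, it can help to sanity-check the file before restarting workers. The following is a rough sketch that uses Python's standard `configparser` rather than Airflow's own configuration loader, so it only approximates how Airflow reads the file. The function name `check_remote_logging` and the specific checks are assumptions made for illustration.

```python
import configparser
from typing import List

REQUIRED_KEYS = ("remote_logging", "remote_base_log_folder", "remote_log_conn_id")

# Example contents matching the [logging] section shown in this article.
SAMPLE_CFG = """
[logging]
remote_logging = True
remote_base_log_folder = s3://airflow-logs
remote_log_conn_id = minio
encrypt_s3_logs = False
"""


def check_remote_logging(cfg_text: str) -> List[str]:
    """Return a list of problems found in the [logging] section (empty if none)."""
    # interpolation=None keeps '%' characters elsewhere in the file from raising errors.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)

    if not parser.has_section("logging"):
        return ["missing [logging] section"]

    section = parser["logging"]
    problems = []
    for key in REQUIRED_KEYS:
        if not section.get(key, "").strip():
            problems.append(f"missing value for {key}")

    if section.get("remote_logging", "").strip():
        try:
            enabled = section.getboolean("remote_logging")
        except ValueError:
            problems.append("remote_logging is not a valid boolean")
        else:
            if not enabled:
                problems.append("remote_logging is not enabled")

    folder = section.get("remote_base_log_folder", "").strip()
    if folder and not folder.startswith("s3://"):
        problems.append("remote_base_log_folder should start with s3://")

    return problems


if __name__ == "__main__":
    issues = check_remote_logging(SAMPLE_CFG)
    print("configuration looks consistent" if not issues else "\n".join(issues))
```

A check like this only validates the file's shape. It does not confirm that the connection ID exists in Airflow or that the bucket is reachable.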

Worker instances must be restarted in order for the new configuration to take effect.
