Hello Kafka: Getting Acquainted With Data Streaming

Kafka is a big data streaming platform used by many enterprises to:

- Provide streams of records, similar to a message queue or enterprise messaging system, to which clients may publish data or subscribe.

- Store streams of records in a fault-tolerant, durable way so that clients may consume the streams and take action on the data.

Since its introduction at LinkedIn in 2011, Kafka has become an essential part of the Big Data landscape. Through the use of its "topics" and ecosystem of components, Kafka provides a uniform way to move data between systems, while providing the foundation for stream analytics and building applications that are able to react to data as it becomes available.

In this article, we will introduce the fundamental components of Kafka and show how to create topics, populate them with sample data, and consume the resulting streams. This article is part of a series that uses a Docker Based Big Data Laboratory that can be deployed using docker-compose. Please see Part 1 for an overview of the environment and deployment instructions.

Kafka Essentials

Kafka is a messaging system that can be used to build data streams. It manages the flow of data and ensures that data is delivered where it is intended to go.

- Data streams can carry explicit types of data: things like patients, orders, diagnoses, and treatments in healthcare; or things like sales, shipments, and returns in general business. Streams are not limited to such records, however. They can also model the actions and events that produce certain types of objects and results.

- Treating data as a stream allows the creation of applications that process and respond to specific events and, in the process, trigger other important actions.

Kafka Capabilities

Kafka supports two main use cases that make it an "application hub":

Stream Processing

Kafka enables continuous, real-time applications to react to, process and transform streams.

Data Integration

Kafka captures events and feeds those to other data systems including big data processing engines such as Spark, NoSQL (key/value systems or document stores), Object Storage (like MinIO, Ceph, Amazon S3, and OpenStack Swift), and relational databases (PostgreSQL and MySQL).

To make it effective as a streaming platform, Kafka provides:

- Real-time publication/subscription at large scale. Kafka's implementation keeps latency low, so real-time applications can use Kafka for time-sensitive data and still operate at high throughput.

- Capabilities for processing and storing data. Kafka is slightly different from other messaging applications, like RabbitMQ, in that it stores data for a period of time. Storing data persistently allows the data to be replayed and makes it possible to integrate with batch systems like Hadoop or Spark.
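The idea of storing data for a period of time and replaying it can be illustrated with a small in-memory sketch. This is a toy model, not Kafka's storage engine: the `RetainedLog` class, its `retention_seconds` parameter, and the sample values are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class RetainedLog:
    """Toy in-memory log with time-based retention (not Kafka's implementation)."""
    retention_seconds: float
    records: list = field(default_factory=list)  # (timestamp, value) pairs

    def append(self, timestamp, value):
        self.records.append((timestamp, value))

    def expire(self, now):
        # Drop records that are older than the retention window.
        cutoff = now - self.retention_seconds
        self.records = [(ts, v) for ts, v in self.records if ts >= cutoff]

    def replay(self):
        # Reading does not remove anything, so replay can be repeated.
        return [v for _, v in self.records]


log = RetainedLog(retention_seconds=60)
log.append(0, "order-1")
log.append(30, "order-2")
log.append(50, "order-3")

first_pass = log.replay()
second_pass = log.replay()     # the same data again: reads are non-destructive
log.expire(now=80)             # cutoff is 20, so the record written at 0 is dropped
after_expiry = log.replay()
print(first_pass, second_pass, after_expiry)
```

Because reads leave the data in place, a batch job can process the same records long after a real-time application has already seen them, as long as the retention period has not elapsed.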

Kafka stores its records as an append-only commit log and organizes them into named streams that are published as "topics".

- A topic is multi-subscriber and can be consumed by one application or many.

- "Consumers" are responsible for tracking their position, which allows for different types of consumers to process messages at their own pace.

- Consumers can be organized into "Groups" to allow for horizontal scalability of the same type of consumer.

- Each piece of data (a record) that is published to Kafka consists of a key, a value, and a timestamp. The value provided can be almost anything including semi-structured data such as JSON or Avro, or unstructured data such as plain text.
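The points in this list can be sketched as a small in-memory model: a topic as an ordered log of records, each with a key, value, and timestamp, and consumers that each keep their own position. The `Record`, `Topic`, and `Consumer` classes are hypothetical stand-ins written for this article, not part of any Kafka client library.

```python
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    key: str
    value: str
    timestamp: float


class Topic:
    """Toy topic: an append-only list where the list index acts as the offset."""

    def __init__(self):
        self._log = []

    def publish(self, key, value, timestamp=None):
        ts = time.time() if timestamp is None else timestamp
        self._log.append(Record(key, value, ts))
        return len(self._log) - 1  # offset of the record just written

    def read(self, offset, max_records=10):
        return self._log[offset:offset + max_records]


class Consumer:
    """Each consumer tracks its own position, independent of other consumers."""

    def __init__(self, topic):
        self.topic = topic
        self.position = 0

    def poll(self, max_records=10):
        batch = self.topic.read(self.position, max_records)
        self.position += len(batch)
        return batch


topic = Topic()
for i in range(5):
    topic.publish(key=f"patient-{i}", value=f"event {i}", timestamp=float(i))

fast = Consumer(topic)
slow = Consumer(topic)
fast_batch = fast.poll(max_records=5)   # reads everything available
slow_batch = slow.poll(max_records=2)   # reads only the first two records
print(fast.position, slow.position)
```

The two consumers read the same topic without interfering with each other, which is what lets different applications process the same stream at their own pace.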

Applications access event streams by connecting to "Brokers," which store the data and respond to access requests from clients (producers and consumers).

Applications which connect to Kafka are generally categorized into Producers and Consumers:

- Producers add messages and data to a topic

- Consumers subscribe to topics and process messages
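As a rough illustration of how producers, consumers, and consumer groups fit together, the sketch below routes records to partitions by key and divides the partitions among the members of one group. It rests on several assumptions: the partition count and member names are hypothetical, `zlib.crc32` is a stand-in hash rather than the hash Kafka's clients actually use, and the round-robin assignment is a simplification of what a real cluster does.

```python
import zlib

NUM_PARTITIONS = 3  # hypothetical partition count for illustration


def choose_partition(key, num_partitions=NUM_PARTITIONS):
    # Stand-in hash: the same key always maps to the same partition.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def assign_partitions(members, num_partitions=NUM_PARTITIONS):
    # Simplified round-robin split of partitions across one consumer group.
    assignment = {member: [] for member in members}
    for partition in range(num_partitions):
        assignment[members[partition % len(members)]].append(partition)
    return assignment


# Producer side: append each record to the partition chosen by its key.
partitions = {p: [] for p in range(NUM_PARTITIONS)}
events = [("order-1", "created"), ("order-2", "created"), ("order-1", "shipped")]
for key, value in events:
    partitions[choose_partition(key)].append((key, value))

# Consumer side: two members of one group share the partitions between them.
assignment = assign_partitions(["consumer-a", "consumer-b"])
print(assignment)
```

Adding a member to the group spreads the partitions across more workers, which is the horizontal scalability that groups are meant to provide.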

Kafka CLI Utilities

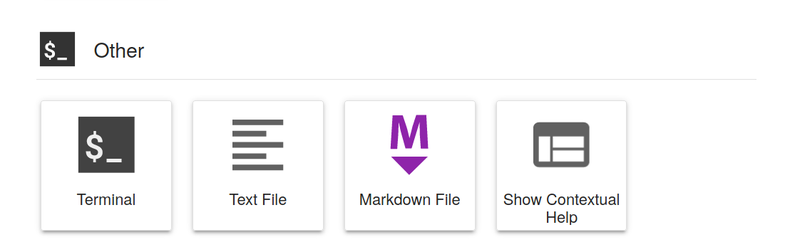

The tools and utilities in this lab are included as part of the Jupyter container in the Big Data lab. You can access them by opening a terminal instance. Terminals are opened by clicking on the "Terminal" option under the "Other" menu.

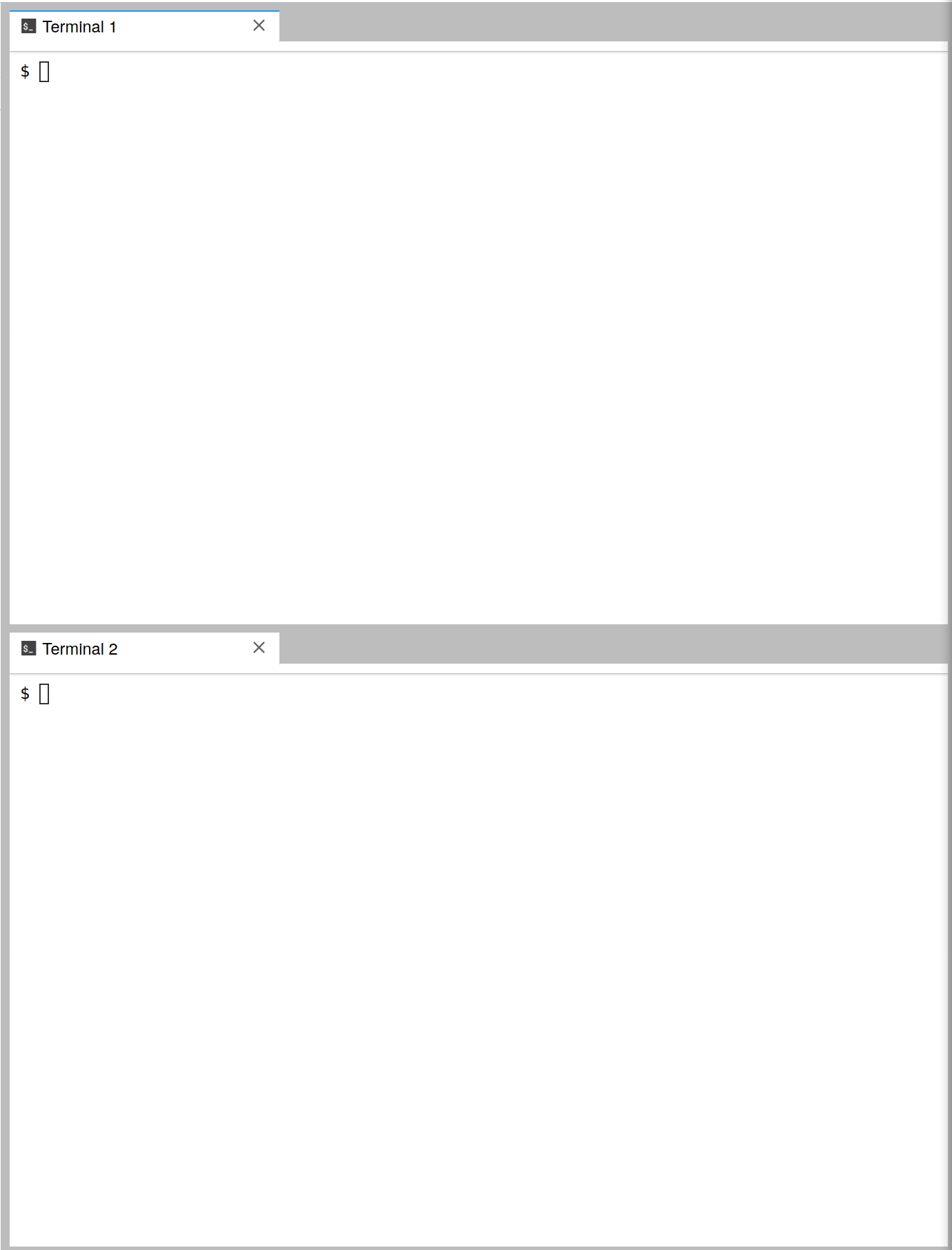

Throughout this lab, you will need to have multiple terminals open. In one terminal you will type commands to submit data, while in the other terminal you will consume data using a separate program.

After you have created the terminals, Jupyter allows you to stack them on top of one another (or side by side) by clicking and dragging a terminal's tab.

All of the Kafka CLI utilities used in this article can be found in /opt/kafka/bin and include:

- /opt/kafka/bin/kafka-topics.sh: the command-line utility used to manage topics and their configuration

- /opt/kafka/bin/kafka-console-producer.sh: a CLI-based producer that allows you to publish data onto a Kafka topic for testing or debugging

- /opt/kafka/bin/kafka-console-consumer.sh: a CLI-based consumer that allows you to consume the messages of a topic for testing or debugging

Producers and Consumers

1. Inspect Existing Topics

In a terminal, list all the topics currently defined in the cluster using kafka-topics.sh:

$ /opt/kafka/bin/kafka-topics.sh --list --zookeeper zookeeper:2181

2. Create a New Topic

Using kafka-topics.sh, create a topic called test that will be used for the remainder of the lab.

$ /opt/kafka/bin/kafka-topics.sh --create --topic test --partitions 1 --replication-factor 1 --if-not-exists --zookeeper zookeeper:2181

The --replication-factor 1 option tells Kafka that the topic has a redundancy of one (which is to say, a single copy of the data and no redundancy at all). In a production system where retention of data is important, you would want a replication factor of two or three.
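The arithmetic behind that recommendation is simple enough to write down. The helper below is a hypothetical illustration, not a Kafka API, and it deliberately ignores producer acknowledgement settings and other durability details: with N copies of the data, at most N - 1 of the brokers holding those copies can be lost before the data is gone.

```python
def tolerated_broker_failures(replication_factor):
    """Copies that can be lost while at least one copy of the data survives."""
    if replication_factor < 1:
        raise ValueError("replication factor must be at least 1")
    return replication_factor - 1


summary = {rf: tolerated_broker_failures(rf) for rf in (1, 2, 3)}
print(summary)
```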

After the topic is created successfully, confirm that it appears in the list by running the kafka-topics.sh --list command from above.

3. Consume Messages from a Topic

The kafka-console-consumer.sh tool provides a number of options to allow you to connect to a topic and inspect the messages. From the terminal session, start a kafka-console-consumer.sh session listening to the test topic you created earlier.

$ /opt/kafka/bin/kafka-console-consumer.sh --bootstrap-server kafka:9092 --topic test --from-beginning

In the connection options, we provide a --bootstrap-server, which the client uses to make initial contact with the cluster. The cluster then helps the client determine where the topic partitions it should be listening to are located and facilitates the connections.
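The two-step flow described here, contacting a bootstrap server and then learning where the partitions live, can be sketched without any network code. Everything in this example is hypothetical: the `CLUSTER_METADATA` dictionary stands in for the response a real cluster would return, and `fetch_metadata` stands in for the network request a real client would make.

```python
# Hypothetical metadata for a one-broker lab cluster with a one-partition topic.
CLUSTER_METADATA = {
    "brokers": {1: "kafka:9092"},     # broker id -> address
    "topics": {"test": {0: 1}},       # topic -> {partition: leader broker id}
}


def fetch_metadata(bootstrap_server):
    # Stand-in for the request a real client sends to the bootstrap server.
    return CLUSTER_METADATA


def locate_partitions(bootstrap_server, topic):
    """Map each partition of a topic to the address of the broker serving it."""
    metadata = fetch_metadata(bootstrap_server)
    leaders = metadata["topics"][topic]
    return {p: metadata["brokers"][broker_id] for p, broker_id in leaders.items()}


locations = locate_partitions("kafka:9092", "test")
print(locations)
```

In a single-broker lab the bootstrap server and the broker holding the partition are the same machine, but in a larger cluster the answer may point the client somewhere else.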

4. Publish Messages to a Topic

In a second terminal, start a kafka-console-producer.sh instance. Once it starts, you will see an angle bracket prompt (>):

$ /opt/kafka/bin/kafka-console-producer.sh --broker-list kafka:9092 --topic test

>

kafka-console-producer.sh can be used to send plain text messages to the --topic specified.

At the prompt, enter a few messages:

> "Hello Kafka! This is my first message!"

> {"hello": "world", "payload": "This is a JSON blob!"}

> <hello-world><msg>Hello world! This is an XML document!</msg></hello-world>

Each message is sent when you press the Enter key.
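All three messages reach the topic as plain strings, so it is up to the consuming application to decide what each one means. As a minimal sketch of that idea, the hypothetical `classify_payload` helper below uses only the Python standard library to tell the three sample messages apart. Note that the first message, with its surrounding quotes, happens to be a valid JSON string, so the helper only reports "json" for objects and arrays.

```python
import json
import xml.etree.ElementTree as ET


def classify_payload(payload):
    """Return 'json', 'xml', or 'text' for a message value received as a string."""
    text = payload.strip()
    try:
        parsed = json.loads(text)
        # A bare quoted string is valid JSON, so only count objects and arrays.
        if isinstance(parsed, (dict, list)):
            return "json"
    except json.JSONDecodeError:
        pass
    try:
        ET.fromstring(text)
        return "xml"
    except ET.ParseError:
        pass
    return "text"


messages = [
    '"Hello Kafka! This is my first message!"',
    '{"hello": "world", "payload": "This is a JSON blob!"}',
    '<hello-world><msg>Hello world! This is an XML document!</msg></hello-world>',
]
kinds = [classify_payload(m) for m in messages]
print(kinds)
```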

From the first window (kafka-console-consumer.sh), watch the messages as they are processed. Then stop the consumer instance and experiment with the --offset and --partition options to explore the ways in which messages can be consumed.
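To build intuition for what these options do, the sketch below treats one partition as a Python list and reads it from a chosen starting point. It is a toy model under stated assumptions: `resolve_start` and `consume` are hypothetical helpers, and the "earliest" and "latest" keywords are used here as illustrative names for the beginning and end of the log.

```python
def resolve_start(offset, log_length):
    """Turn an offset setting into a list index within one partition."""
    if offset == "earliest":
        return 0
    if offset == "latest":
        return log_length
    start = int(offset)
    if start < 0:
        raise ValueError("offset must be non-negative")
    return min(start, log_length)


def consume(partition, offset):
    # Return every message at or after the resolved starting position.
    return partition[resolve_start(offset, len(partition)):]


partition_0 = ["msg-0", "msg-1", "msg-2", "msg-3"]

from_start = consume(partition_0, "earliest")   # all four messages
from_two = consume(partition_0, 2)              # skips msg-0 and msg-1
from_end = consume(partition_0, "latest")       # nothing until new data arrives
print(from_start, from_two, from_end)
```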
